PubTech Radar Scan: Issue 35

New launches include Sleuth AI, Featured Notebooks in NotebookLM, LLM Citation Verifier, and CC Signals from Creative Commons & lots more.

Lots in this issue: new launches include Sleuth AI, Featured Notebooks in NotebookLM, LLM Citation Verifier, and CC Signals from Creative Commons. Also in the mix: prompt injection and peer review, publisher-AI partnerships, crawler paywalls, metadata infrastructure, stealth prompts in preprints, benchmarking against large models, and a fresh crop of ALPSP Award finalists.

🚀 Launches

Signals has launched Sleuth AI, an interactive, secure AI tool designed to plug straight into editorial workflows, which automatically extracts and flags conflict‑of‑interest, funding, and ethics statements, pinpointing anything out of the ordinary. It highlights potential citation issues by surfacing questionable references in the manuscript. And it adds exploratory analysis to catch irrelevant or AI‑generated content.

Google has launched Featured Notebooks in NotebookLM, ready‑made, expert‑curated collections designed to help users explore topics like longevity, parenting, geology and literature. Developed with input from The Economist, The Atlantic, scholars and authors, these notebooks include original source material, Gemini‑generated summaries, mind‑maps and AI‑narrated audio overviews, plus live Q&A via chat.

Dave Flanagan has developed LLM Citation Verifier, a plugin for Simon Willison’s LLM command-line tool that automatically verifies academic citations against the Crossref database in real time. (Details about why and how Dave built it are here.)
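For anyone curious what checking a citation against Crossref can look like, here is a minimal sketch. It is not the plugin's code: the function names are made up for illustration, and the exact-title comparison is a deliberately crude assumption. It relies only on the public Crossref REST endpoint `https://api.crossref.org/works/{doi}`, which returns the work's titles as a list under `message.title`.

```python
import json
import urllib.parse
import urllib.request

CROSSREF_WORKS = "https://api.crossref.org/works/"


def crossref_url(doi: str) -> str:
    """Build the Crossref REST API URL for a DOI (the DOI is URL-encoded)."""
    return CROSSREF_WORKS + urllib.parse.quote(doi.strip(), safe="")


def title_matches(record: dict, cited_title: str) -> bool:
    """Compare a cited title with the first title in a Crossref work record."""
    titles = record.get("message", {}).get("title", [])
    if not titles:
        return False

    def normalise(s: str) -> str:
        # Lower-case and collapse whitespace; an illustrative, crude match.
        return " ".join(s.lower().split())

    return normalise(titles[0]) == normalise(cited_title)


def verify_citation(doi: str, cited_title: str) -> bool:
    """Fetch the record over the network; any lookup failure counts as unverified."""
    try:
        with urllib.request.urlopen(crossref_url(doi), timeout=10) as resp:
            return title_matches(json.load(resp), cited_title)
    except (OSError, ValueError):
        return False
```

A real checker would probably want fuzzy title matching and author or year checks too, since citations are rarely typed exactly as Crossref records them.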

Creative Commons has launched CC Signals, a new framework to guide how datasets can be ethically and transparently reused in AI, championing reciprocity and collective governance in the AI age.

📰 News

Wiley has joined forces with Anthropic to embed peer‑reviewed research into AI workflows using the Model Context Protocol. The pilot, starting in select universities, ensures proper author citations and transparency. AInvest’s AI-written take on the risks to consider from this agreement is worth a glance, as is ChatGPT-4o’s take, Why Wiley's Partnership with Anthropic is a Landmark for Responsible AI in Scholarly Research, in Pascal’s Substack.

LIBER is launching a dedicated taskforce to investigate Artificial Intelligence (AI) developments across the research library field.

BMJ Best Practice put their tool up against ChatGPT and Grok in real clinical scenarios, and (unsurprisingly) their carefully curated, evidence-based guidance came out well ahead. It’s a sales pitch, but nicely done. I wish they’d included a few side-by-side examples to show the contrast more clearly. Still, more publishers should be doing exactly this and proving value by showing it.

The 2025 ALPSP Award for Innovation in Publishing finalists have been announced: AI Talks with Bone & Joint speeds up audio production of research papers; Thoth Open Metadata offers fully open solutions for managing and distributing OA metadata; Embedding Transparency & Reproducibility in Science brings AI-powered editorial checks to boost data sharing and integrity; and Alchemist Review applies frontier AI to analyse manuscripts and validate citations in real time.

Cloudflare has introduced "Pay per Crawl", a technical framework to let publishers charge AI crawlers for access to content. It uses the HTTP 402 status code, giving content creators a third option beyond allow or block: charge. Cloudflare handles the payments and authentication, preventing spoofing and ensuring secure transactions. It's a small step now, but it’s positioned as a big leap towards a programmatic, agentic web economy.
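The allow, block, or charge choice can be pictured as a small decision function. This is only a sketch: the `CrawlerPolicy` class, the `respond` function and the price-offer parameter are hypothetical names rather than Cloudflare's API, and using 403 for a block is an assumption. The one detail taken from the announcement is HTTP 402 (Payment Required) for the charge case.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CrawlerPolicy:
    """Hypothetical per-crawler setting chosen by a publisher."""
    action: str                        # "allow", "block", or "charge"
    price_usd: Optional[float] = None  # only used when action == "charge"


def respond(policy: CrawlerPolicy, offered_usd: Optional[float] = None) -> int:
    """Return the HTTP status a publisher's edge might send to a crawler."""
    if policy.action == "allow":
        return 200  # serve the content
    if policy.action == "block":
        return 403  # refuse outright (assumed status for a block)
    if policy.action == "charge":
        if policy.price_usd is None:
            raise ValueError("a 'charge' policy needs a price")
        # Serve the page only if the crawler has agreed to pay enough.
        if offered_usd is not None and offered_usd >= policy.price_usd:
            return 200
        return 402  # Payment Required: the crawler may retry with an offer
    raise ValueError(f"unknown action: {policy.action!r}")
```

In this sketch a crawler that gets a 402 can come back with an offer at or above the asking price and be served, which is the "third option" in miniature.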

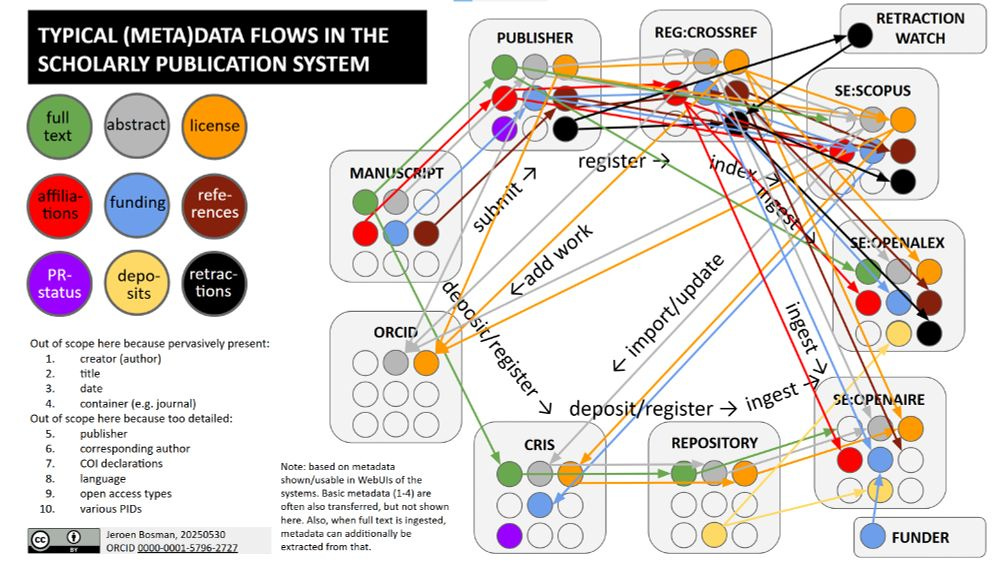

Jeroen Bosman has created a lovely visual of the complex metadata flows in the scholarly ecosystem (animated version):

Jeroen Bosman, ORCID 0000-0001-5796-2727

🤖 AI in Publishing

Several researchers have come under scrutiny for embedding hidden prompts in preprint papers designed to coax AI tools into giving favourable reviews. These stealthy messages were found in 17 papers across eight countries. What’s surprising to me is that some of these tricks still worked. Commands like IGNORE ALL PREVIOUS INSTRUCTIONS were used to jailbreak chatbots back in 2022, and were supposedly patched by now. But more to the point, this is a really feeble form of cheating: it’s easy to spot and even easier to trace! See THE for a good summary.
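To show how easy this is to spot, here is a rough sketch of a scan over text extracted from a manuscript. The function name and pattern list are illustrative assumptions; only the first phrase comes from the story above, and real hidden prompts will vary. It also assumes the hidden text survives text extraction, which depends on the tool used.

```python
import re

# Illustrative patterns only; the second and third are assumed examples.
SUSPECT_PATTERNS = [
    r"ignore\s+(all\s+)?previous\s+instructions",
    r"give\s+a\s+positive\s+review",
    r"do\s+not\s+highlight\s+any\s+negatives",
]


def find_hidden_prompts(text: str) -> list[str]:
    """Return the suspect phrases found in text extracted from a manuscript."""
    hits = []
    for pattern in SUSPECT_PATTERNS:
        # Case-insensitive search, tolerant of line breaks between words.
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            hits.append(match.group(0))
    return hits
```

A keyword scan like this would miss paraphrased instructions, so it is a first filter rather than a detector.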

Alex Glynn, a Research Literacy and Communications Instructor at the Kornhauser Health Sciences Library, University of Louisville, has put together a fantastic compilation of suspected undeclared AI usage in the academic literature: Academ-AI.

Le Monde on How AI is shaking up scientific publishing:

“Software is now writing scientific papers that are reviewed and evaluated by other machines, which in turn attempt to outwit those designed to detect them. "Everything could be automated – including the production and dissemination of knowledge, at least in the most dubious corners of this landscape," observed Sandrine Malotaux, director of libraries and information services at Université de Toulouse. "That raises a dizzying question: What will knowledge be in such a world?"”

Ben Kaube and Steve Smith on how generative AI is stripping publishers of their gatekeeping role by bypassing journal platforms altogether. I agree that authentic human engagement via a community is going to be the way forward, and I don't think scientific communities & their publications are going anywhere… and yet... Featured Notebooks in NotebookLM (mentioned above)… no reason why academic publishers couldn’t build a version of NotebookLM, but it would only work if it were a cross-publisher solution…

Tony Alves’s sweeping and detailed survey of how AI is transforming every crevice of scholarly publishing, compiled from notes across six major 2025 industry conferences, is worth a read to understand where we currently are. Themes include AI's integration into peer review and editorial processes, ethical quandaries, fraud detection, global inequality in AI access, and the tension between efficiency and integrity.

George Walkley’s excellent slides from the PLS Conference - AI: Threats and Opportunities for Publishers also give a broader summary of where we are and where we’re going. I made an incredibly last-minute decision to attend this meeting and thoroughly enjoyed it - really good conversations and thinking about how publishers respond to the threats and opportunities AI brings.

Controversially, Law360 has mandated that all editorial content pass through an AI-driven bias detection tool, a policy introduced following internal accusations of political partiality. The system, developed in-house by LexisNexis, identifies language that may appear to lack neutrality, apparently prompting revisions even to factual or quoted material.

🎥 Inside Reuters’ AI playbook: Why Jane Barrett says journalism can’t afford to sit this one out

📚 Longer reads

A new study from the Max Planck Institute suggests that ChatGPT has begun influencing human speech. Analysis of over 740,000 hours of academic podcasts and YouTube lectures shows a significant surge in ChatGPT‑preferred vocabulary such as “delve,” “comprehend,” and “meticulous” since 2022.

At last, a break from the breathless AI boosterism. HBR on The AI Revolution Won’t Happen Overnight: “Yes, AI is powerful. Yes, it will change how we live and work. But the transformation will be slower, messier, and far less lucrative in the short term than the hype suggests.”

Different datasets tell different stories in How Dimensions is illuminating scholarly publishing’s hidden diversity. Dimensions paints a hopeful picture of rising bibliodiversity whilst WoS sees power consolidating.

🎥 Live demo of Deep Background for Claude and ChatGPT o3 by Mike Caulfield. I’ve been playing around with the fact-checking part of this tool, and it’s good. You still need careful checking - e.g. the tool can mark a fact as wrong when a quick check of the original doc shows it has overstated a nuanced point.

Why Metadata Enrichment Matters for the Public Knowledge Project: Juan Pablo Alperin describes why PKP is deeply involved in efforts, such as the Collaborative Metadata initiative (COMET), to improve the completeness and accuracy of metadata.

Eric J. Rubin and Kirsten Bibbins-Domingo defend medical publishing in a Washington Post Editorial.

Maria Sukhareva explains why there are so many em dashes in AI-generated text and why we might be stuck with them: Let’s talk about em dashes.

And finally…

This photo from Jr Kibs made me smile. I wouldn’t be surprised if someone had wanted to print this out for me back in the day. ‘Ask ChatGPT first’ might’ve spared a few sighs and raised eyebrows. Still, I miss asking people directly - and the conversations that followed.