PubTech Radar Scan: Issue 29

Alchemist Review, zero-click journeys, Disentis Roadmap, new IOI Fund, openRxiv, CCC AI License, EasyJournal, AI Scientist-v2, YesNoError, readers prefer AI-generated newsletter headlines, & more...

News, New to Me & Launches

🚀 Hum and GroundedAI have launched Alchemist Review, an AI-driven peer review solution. Now being tested by select journals, it aims to tackle the age-old publishing woes of reviewer fatigue and snail-paced turnaround times. See also: Ann Michael introduces the Peer Review Assistant (PRA). [More to come on this in a future issue!]

📉 The launch of Google’s AI-powered search summaries has led to a 19% drop in click-through to academic reference services, according to OUP product strategy director John Campbell. "Discussing what he called the advent of “zero-click journeys” for academic publishers, Campbell explained how half of all Google keyword searches likely to surface information within OUP’s own platform, Oxford Academic, now appeared with an AI-generated description next to them." [H/T: MediaMorph]

🚀 The Disentis Roadmap is an ambitious 10-year plan to unlock research data currently buried in scientific papers. By 2035, the initiative aims to create a ‘Libroscope’, a pioneering system that will make biodiversity knowledge freely accessible, AI-ready, and fit for purpose in science, policy, and conservation.

💰 Invest in Open Infrastructure (IOI) has launched its call for proposals for the IOI Fund for Network Adoption. With support from major philanthropic organisations, the fund aims to boost open data and content infrastructure across Africa, Latin America, and North America.

🗂️ openRxiv is the newly launched nonprofit home for bioRxiv and medRxiv, aiming to ensure that life and health science preprints remain freely accessible and researcher-led. See the Science article.

🚀 CCC will launch a new AI Systems Training License in late 2025 to help organisations legally use third-party content in AI training and output.

🛠️ EasyJournal, an experimental open-source prototype journal platform developed by Adam Hyde, is looking for early testers. More about the experiment.

Peer review

🤖 Japanese startup Sakana claims its AI tool, The AI Scientist-v2, successfully generated a scientific paper that passed peer review at an ICLR workshop. However, the paper, which was later withdrawn, contained “embarrassing” citation errors, such as wrongly attributing a method to a 2016 paper instead of the original 1997 source. In a candid blog post, the company also admitted that none of its AI-generated studies met the internal standards required for submission to ICLR’s main conference track. [TechCrunch story]

🔥 I think YesNoError sounds like an interesting experiment, but I also like Carl T. Bergstrom’s Bluesky post about the write-up in Nature: “Is everyone huffing paint? Crypto guy claims to have built an LLM-based tool to detect errors in research papers; funded using its own cryptocurrency; will let coin holders choose what papers to go after; it's unvetted and a total black box—and Nature reports it as if it's a new protein structure.”

🐶 Neil Blair Christensen is shamelessly resorting to adorable dog pics to nudge folks in peer review and editorial circles to take a 1-minute survey on publication integrity. Survey questions | Dog pic

AI

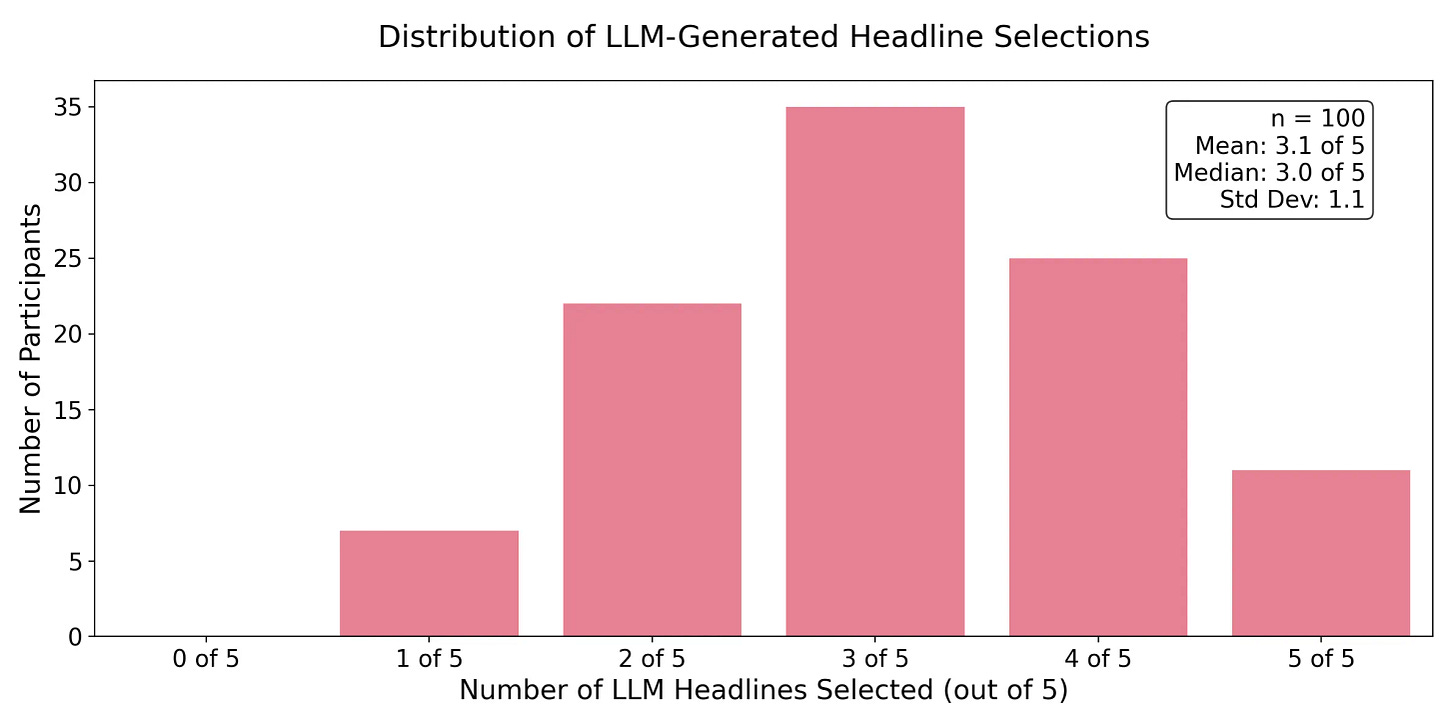

📰 A controlled study by Nick Hagar investigated whether an LLM could use someone’s reading history to adapt newsletter content in an engaging way. The result: readers preferred AI-generated newsletter headlines 62% of the time.

👎 No great surprises here, but useful to have the data: a Tow Center study shows that generative AI search tools often struggle with citing news accurately. Even platforms with licensing deals don’t show much improvement when it comes to giving credit where it’s due.

🔧 A new report from FT Strategies, in partnership with the Google News Initiative, guides publishers through evaluating cost, feasibility, risk, and strategic alignment when facing the “Build or Buy” dilemma. It’s aimed at news publishers, but the helpful concluding questions to ask yourself as you consider AI deployment apply to most companies.

🧪 A quick project by Ian Mulvany used AI tools like NotebookLM, DeepSeek, and Claude to highlight M&A activity in scholarly publishing from 2021–2025. Drawing on ~30 sources (including Clarke & Esposito's newsletter), it efficiently produced a visual snapshot of industry consolidation.

📈 New Adobe research shows a 1,200% jump in AI search referrals to retail sites in 2024–25. Shoppers are turning to tools like Perplexity, ChatGPT, and Google AI for product research (55%) and shopping (39%). Meanwhile, Bain’s Generative AI Consumer Survey finds that about 80% of search users rely on AI summaries at least 40% of the time.

Longer reads

🔖 AI isn’t making intelligence ubiquitous; it’s commoditising expertise. Tim O’Reilly argues that true intelligence lies in creativity, values & taste: the ability to see what others miss and make meaningful choices.

🔖 I finally read Geoffrey Bilder’s "On AI" from 2023. His call to stop calling it "AI" may be a battle already lost! He argues that metadata and context, not flawed AI detection, are key to research integrity. In 2025, we’re seeing more context-based approaches coming from start-ups and other organisations, but will any of them be community-driven?

🔖 Molly White’s “‘Wait, not like that’: Free and open access in the age of generative AI” explores how generative AI challenges the free and open web, and how restrictive responses could do more harm than good. Could solutions like fair compensation, ethical AI use, and sustainable frameworks protect open knowledge? Maybe… Hopefully…?

🔖 Bergstrom & West deliver a succinct and measured 'lesson' on AI in science, covering protein design breakthroughs, Sakana AI's $15 AI-written papers, and peer review by LLM. A thoughtful take on where AI helps and where it’s just hype.

Events

📅 AI Publishing Collective meetup in London: keep 29 April and 14 May free in your diary! Join the group on LinkedIn for updates.

📅 The BCS Knowledge Discovery & Data Mining series on 14 May (6–7:30 PM BST) has two talks that might be of interest: Anna Fensel on knowledge graphs, FAIR principles, and generative AI in agri-food, and Anelia Kurteva on responsible AI through data governance. More details & Zoom link (the website uses http and flags up a security warning; the Zoom link for the event, with no registration needed, is at https://ukkdd.org.uk/2025/?section=registration).

📅 AI and the Future of News 2025, 26th March 2025: a one-day conference with speakers from journalism and academia. Free and online.

And finally…

❤️ I absolutely love this from the University of York Library on Bluesky: “Not that we in the library wish to encourage profanity, but if you want to get rid of these AI-search summaries (which are often absolute nonsense), just swear in your search terms.”