PubTech Radar Scan: Issue 23

Things that have caught my attention over the last couple of weeks include a new Booktokers community, the Argos retraction data service, DeSci Labs’ novelty score rankings, Sylla, Primo’s Research Assistant and a variety of other chatbot/RAG services.

🚀 News, New & Newish things

Sam Missingham has launched MeetTheBooktokers.com, a global community for booktokers, authors and publishers.

Argos, from an ex-Springer Nature team, aims to provide a comprehensive retraction service to uphold the highest standards of scientific integrity: “With daily updates, personalized retraction alerts, and seamless integration into workflows via an API, Argos swiftly identifies retracted articles and flags areas of concern.”

Via DeSci Labs you can create ad-hoc novelty score rankings by searching for authors, topics, journals, or institutions. A fairly random search, designed to surface articles with different scores, gives curiously interesting results. I’m not too sure how to use it or how to evaluate whether the results are really meaningful (I am not their target audience), but it’s fun to play around with.

Tom Mosterd, Max Mosterd, Sam Eerdmans and Dennis Stander have developed Sylla to match university syllabi with literature that is open access or licensed by a university.

Good news for the Public Knowledge Project (PKP): their Open Journal Systems (OJS) software has been chosen as the platform for the European Commission’s Open Research Europe (ORE).

Chris Leonard’s Wonderful Bullshit Journal Generator is fun but far too realistic!

🤖 RAG & Chatbots

Ex Libris Primo Research Assistant is now available for Primo institutions. Aaron Tay’s AI & Retrieval augmented generation search - the content problem - reactions from librarians, authors and publishers & thoughts on tradeoffs is a must-read. One question Aaron considers is whether publishers should allow their content to be used to generate answers in RAG systems:

“My first thought is publishers wanting to opt out are shooting themselves in the foot. They are making the same mistake as publishers first refusing to be indexed in Google Scholar and later the central discovery indexes by Summon, Primo, EDS in the early years of 2010s. In the end, pretty much everyone gave in, because if you were not discoverable in these indexes and your competitors were, you would just lose out and would be less discoverable and hence less used.”

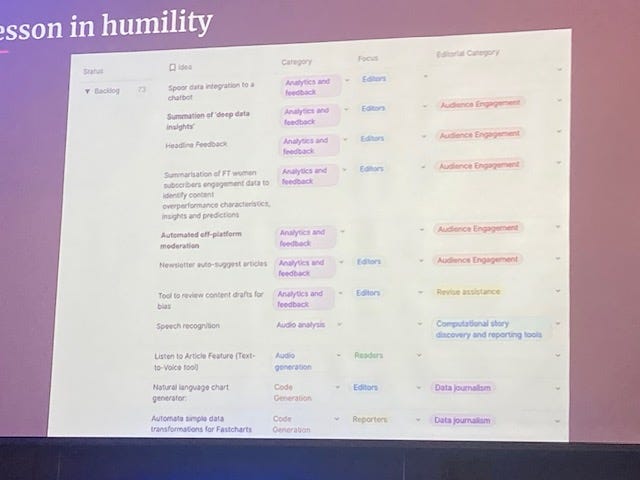

I also see the parallels with the early years of search, but I think publishers should be cautious here. Search took the content, indexed it and linked to it. GenAI chatbots take the content and can combine it with other information to turn it into new content which is, potentially, more useful. RAG searches sit somewhere in the middle. How do we attribute usage back to an article when 10% of a chatbot answer might have come from that article? Will this type of usage/attribution model make sense in the future? (COUNTER GenAI FAQs here).

Climate Answers, a new experimental chatbot from the Washington Post which launched in July, uses their reporting to answer questions about climate change, the environment, sustainable energy, etc. If the chatbot doesn’t know the answer, it says so rather than making something up.

At the recent Future of Media Technology Conference a number of the larger [not academic] publishers mentioned that they had these kinds of chatbots running internally but were unwilling to make them public because of concerns about potential brand damage, given that they’re in the business of providing trusted information. As the Financial Times (FT) put it, “Hallucinations are a bug in media and a feature in LLMs”. I was impressed by a video of an experimental application for FT journalists which combined FT content, preset system prompts and various checkbox options to help journalists write better prompts. Is it better to train everyone in prompt engineering or to write an application that makes it super easy to write complex prompts for common tasks? They also put up a fascinating screenshot of part of their GenAI ideas backlog as part of a lessons-learned session:

📚 Reading list

I don’t usually read memoirs but enjoyed Lindsay Nicholson’s Perfect Bound. The former editor of Good Housekeeping magazine talks about workaholism, trauma, loss and the pressures of maintaining a perfect image in the magazine industry. I don’t think we talk about the addictive nature of magazine/journal publishing enough. If you’re currently working in publishing, Arthur C. Brooks on Success Machines/work addiction touches on similar themes and is probably going to resonate, because the teamwork and resulting dopamine hit that comes from successfully getting another issue out of the door is addictive.

Eryk Salvaggio’s Challenging The Myths of Generative AI is a long read about how metaphor is being used/misused to help us think about what AI is and what it will do.

Exploring AI by Lori Carlin from Delta Think.

Maanas Mediratta on AI transformation for digital publishers [Part 2] - Is your content even ready for AI?

GenAI Dataset Curation and Robots.txt by David Atkinson

[Press release] CCC Pioneers Collective Licensing Solution for Content Usage in Internal AI Systems

🚲 And finally…

I am taking a bit of a break and heading off on the Brompton for an adventure/digital nomad job hunt. Probably best to message me via LinkedIn if you want to get in touch!