PubTech Radar Scan: Issue 22

Things that have caught my attention over the last couple of weeks include an AI-powered peer review tool called Eliza, the FAIR Connect search engine and dashboard, Talpa Search, Research Kick Chat, C2PA, the ‘tizzy’ around GenAI in scientific writing, Sakana’s AI Scientist, shared vs open infrastructure, academic fracking, Chief Process Officers, how news publishers are using GenAI, and 67 Bricks on product development.

🚀 Launches

World Brain Scholar has launched Eliza, an AI-powered peer review tool intended to speed up peer review and improve its quality, helping reviewers and editors make faster, better-informed decisions.

The FAIR Connect team has launched a new prototype search engine and dashboard to help the FAIR data stewardship community find and reuse FAIR Supporting Resources.

Talpa Search by LibraryThing: lets you find books by describing, in natural language, plot details, genre, and cover visuals such as color and imagery.

Research Kick Chat: Launched by Mushtaq Bilal and Minh-Phuc Tran, this AI app integrates various AI models with research databases such as Semantic Scholar, PubMed, and Scite, allowing users to create customized AI-powered assistants for academic tasks.

🤖 AI

C2PA: The Coalition for Content Provenance and Authenticity (C2PA) has developed an “open technical standard providing publishers, creators, and consumers the ability to trace the origin of different types of media”. I think this is important and something researchers and academic publishers need to look at adopting. The overview video is a good introduction to why this is needed and to the approach. (H/T: Kaveh of River Valley Technologies, who is already thinking about how to adopt the standard and how to use it to help with research integrity issues in images.)

Diana Kwon from Nature News asks in what circumstances GenAI should be allowed in scientific writing. I like this quote: “Everybody’s worried about everybody else using these systems, and they’re worried about themselves not using them when they should,” says Debora Weber-Wulff, a plagiarism specialist at the University of Applied Sciences Berlin. “Everybody’s kind of in a tizzy about this.” Tizzy is such a great word for much of the discussion about using GenAI in scientific writing, especially when services like Sakana’s AI Scientist are just around the corner. This paper adds more evidence that neither people nor AI tools can accurately detect well-prompted AI writing.

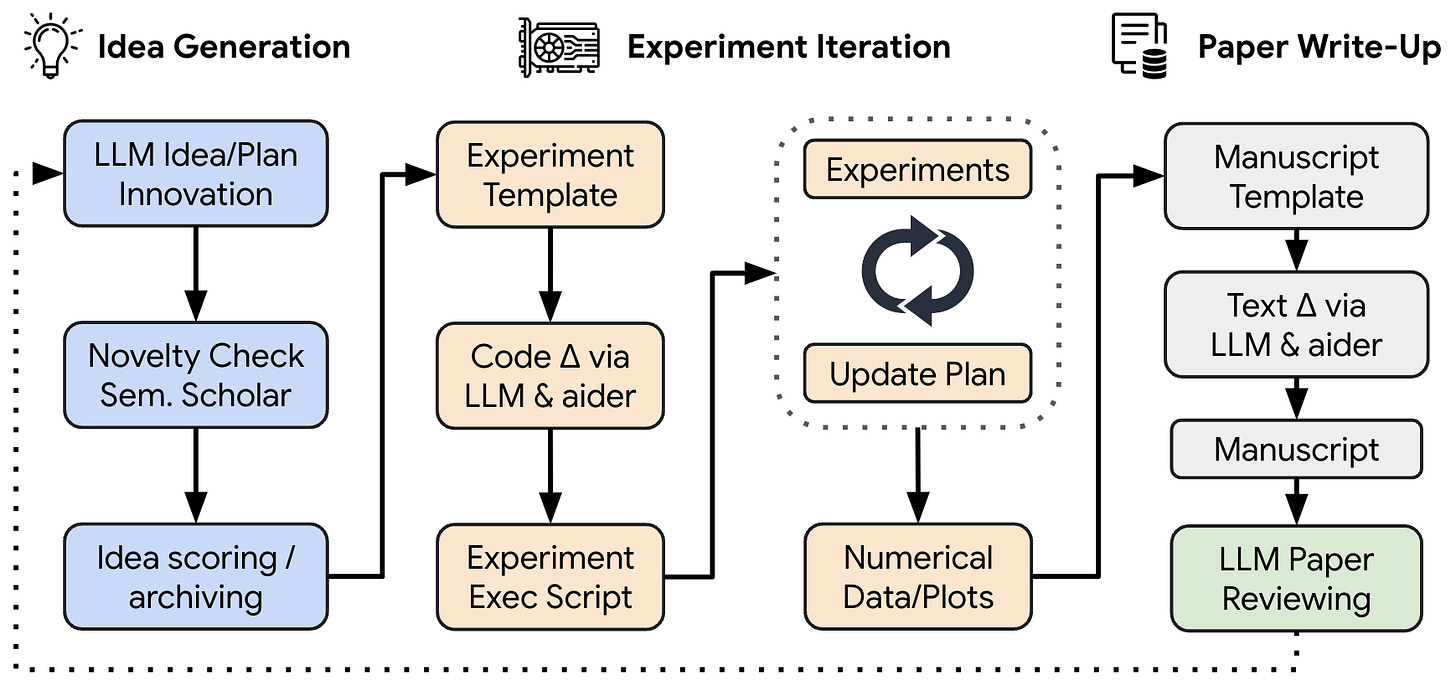

AI Scientist: From Sakana’s paper, The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery: “The AI Scientist first brainstorms a set of ideas and then evaluates their novelty. Next, it edits a codebase powered by recent advances in automated code generation to implement the novel algorithms. The Scientist then runs experiments to gather results consisting of both numerical data and visual summaries. It crafts a scientific report, explaining and contextualizing the results. Finally, the AI Scientist generates an automated peer review based on top-tier machine learning conference standards. This review helps refine the current project and informs future generations of open-ended ideation.” I think the publishing community needs to think much more about these kinds of approaches and how they can support the associated research communities.

🔍 AI and peer review

Springer Nature’s approach: Markus Kaindl, Director of Content Innovation at Springer Nature, discusses how AI can assist reviewers by providing initial review drafts, making the review process more effective and rewarding.

I agree with Ashutosh Ghildiyal that “The essence of scholarly publishing lies in peer review, and the essence of peer review is human attention”, but I also think AI review will become a huge part of *some* fields and types of academic publishing. Most publisher processes currently look something like this:

Submission > Largely human triage/screening/desk rejects > Human peer review possibly with a small amount of AI assistance

I think we’re a couple of years away from a spectrum of approaches for triage and peer review which will include:

100% AI peer review > Human in the loop but largely AI peer review > AI-assisted human review > 100% human review

I don’t think human review is going to disappear. There’s a big difference between the kind of peer review done on The BMJ’s articles, for example, to improve clarity for readers, and the often more templated approach to peer-reviewing a sound science paper. (Whilst both approaches could be done by AI, I think most research communities will continue to place a higher value on human reviews than on machine-generated reviews.)

📚 Longer reads

Shared infrastructure ≠ Open infrastructure: Geoffrey Bilder reflects on the history of the Principles of Open Scholarly Infrastructure (POSI), makes some thoughtful comments about the shared/closed nature of research integrity infrastructure, and notes that most research integrity infrastructure is shared, not open. I think the management of research integrity infrastructure should be getting more attention from researchers than it currently does.

Academic Fracking: I don’t agree with all the sentiments in Lance Eaton’s article, Academic Fracking: When Publishers Sell Scholars Work to AI, but “academic fracking” is a great term. The article reminds me of the Steve Miller Band’s “Take the Money and Run.” To be clear, publishers aren’t committing any crimes here, but if you were offered large sums of money for your content (content that the AI companies have already taken), and you suspected some of the companies offering this money might not last 18 months, wouldn’t you take the money and run?

Chief Process Officers: Sarah Burnett writes on Beyond Efficiency: The Role of a Chief Process Officer in Strategic Success. Many publishers already have similar roles, but at lower levels within the organisation. I think these types of roles will be key to rethinking workflows and integrating AI technologies.

News Publishing and GenAI: Maanas Mediratta writes about how news publishers are using GenAI technologies for chatbots and polls.

Product Development: The latest issue of 67 Bricks’s newsletter, on ‘prescriptive intelligence’, is a great read for anyone interested in digital products, with articles on using a product mindset to unlock growth, smooth product launches, and a rather interesting new Product Data Maturity Map.

📅 Topical

BMJ Group and Karger Publishers have joined forces to present the Vesalius Innovation Award for innovation in science communication. Deadline fast approaching!

Have your say on key issues as COUNTER develops by joining one of its new focus groups.

Join Karim B. Boughida, Paul Groth, Helen King, and Hong Zhou, PhD, MBA on Wednesday, September 11 at 11:00 am EDT to learn why metadata is critical to the data used in training and operating AI algorithms.